Springer Nature’s Manuscript Transfers Service

The problem

A team at Springer Nature was building an automated ‘transfers’ service - an online portal allowing academics to re-submit a rejected manuscript to another Springer Nature journal of their choice. This new product would complement the existing transfers service - a team of subject matter experts available for email consultation on re-submitting papers.

Increasing the number of successful ‘transfers’ to keep rejected manuscripts within Springer Nature was a key goal for the business. And the project had a passionate, visionary stakeholder with many big ideas for improving both the existing service and the new automated portal. But the portal's launch had stalled due to technical issues, and it was still unknown whether it would succeed with users. The many ideas for improving the portal further remained untested, and morale was low.

The outcome

I helped the team re-evaluate their goals, map out the existing service, uncover quick user experience wins, and pinpoint assumptions and unknowns that we needed to investigate longer term in order to validate our big ideas for the new automated portal.

I implemented a dual-track agile approach, working with the team to deliver near-term fixes on the backlog while collaborating on lean tests to validate some of our business owner’s biggest ideas for new service offerings - all requiring little or no code. This let us learn whether our ideal future service had legs at a time when the portal's launch was stalled by technical issues. I helped the team start tracking user behaviour on the portal for the first time, to see whether changes were actually making an impact; the team observed their first user research sessions; and everyone got better at collaborating.

The discovery work helped the team prioritise their many ideas, killing some that didn’t show promise. It also helped us optimise the product and come up with new ideas for repositioning it to better serve users’ needs - including solutions that weren’t dependent on the technology that was causing us issues.

My process

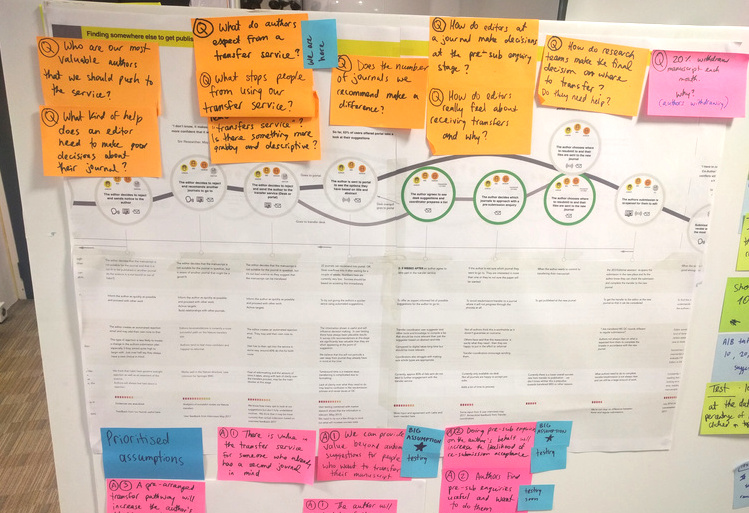

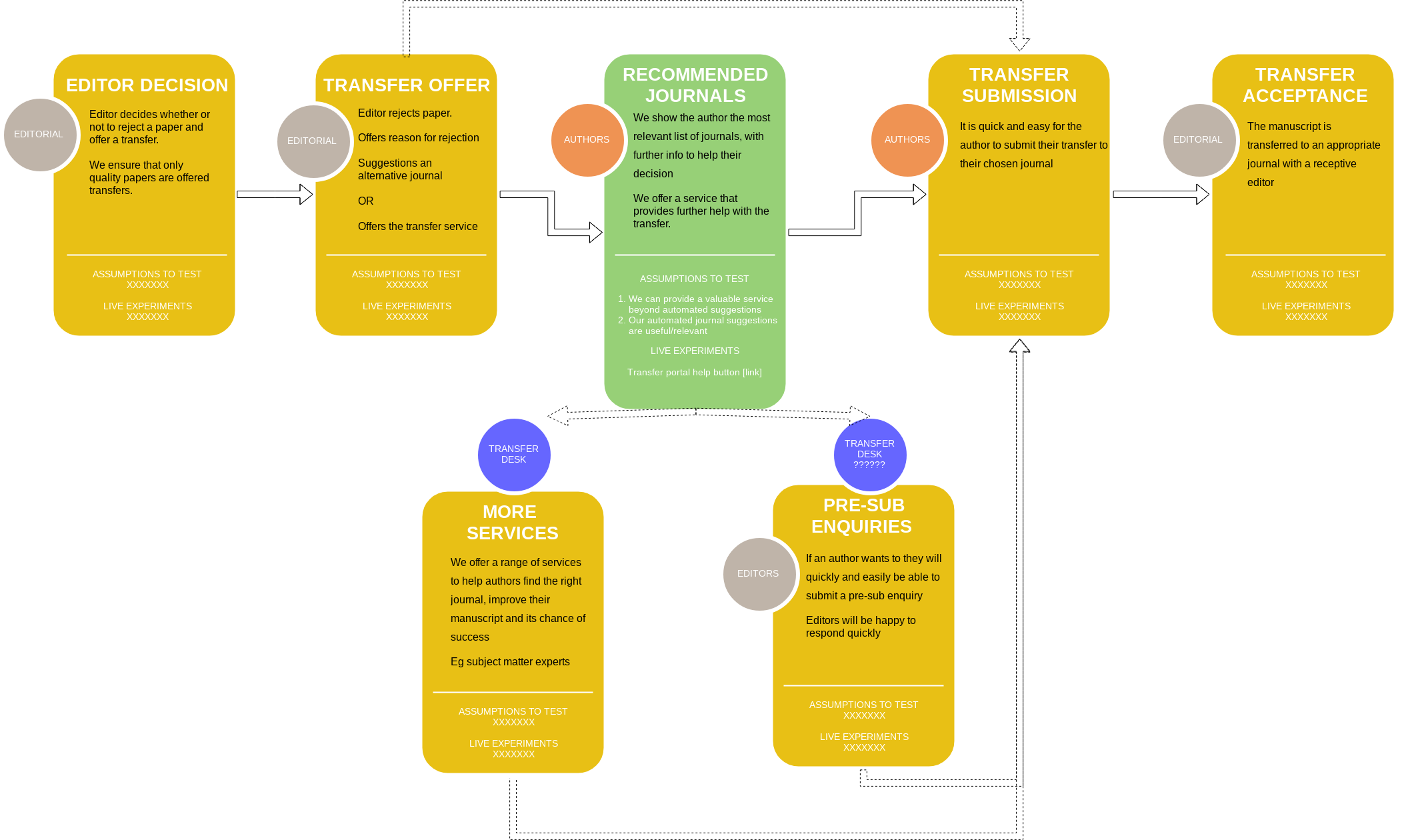

Mapping the current experience and capturing assumptions

I used existing research to create an experience map of the current service, and brought it to the team. The service was complicated, with multiple systems used by academics, journal editors, and our ‘transfers team’ - each group with their own behaviours and goals - but this had not yet been captured.

Manuscript transfers service experience map

For some developers on the team, this was their first introduction to how the service actually worked. We used the map as a starting point to discuss the bigger picture: where should we focus our energies, and where were we missing important data to help us make decisions?

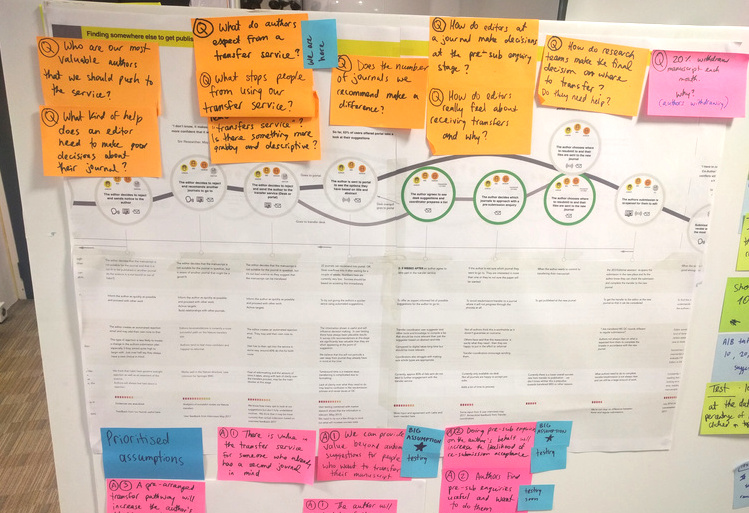

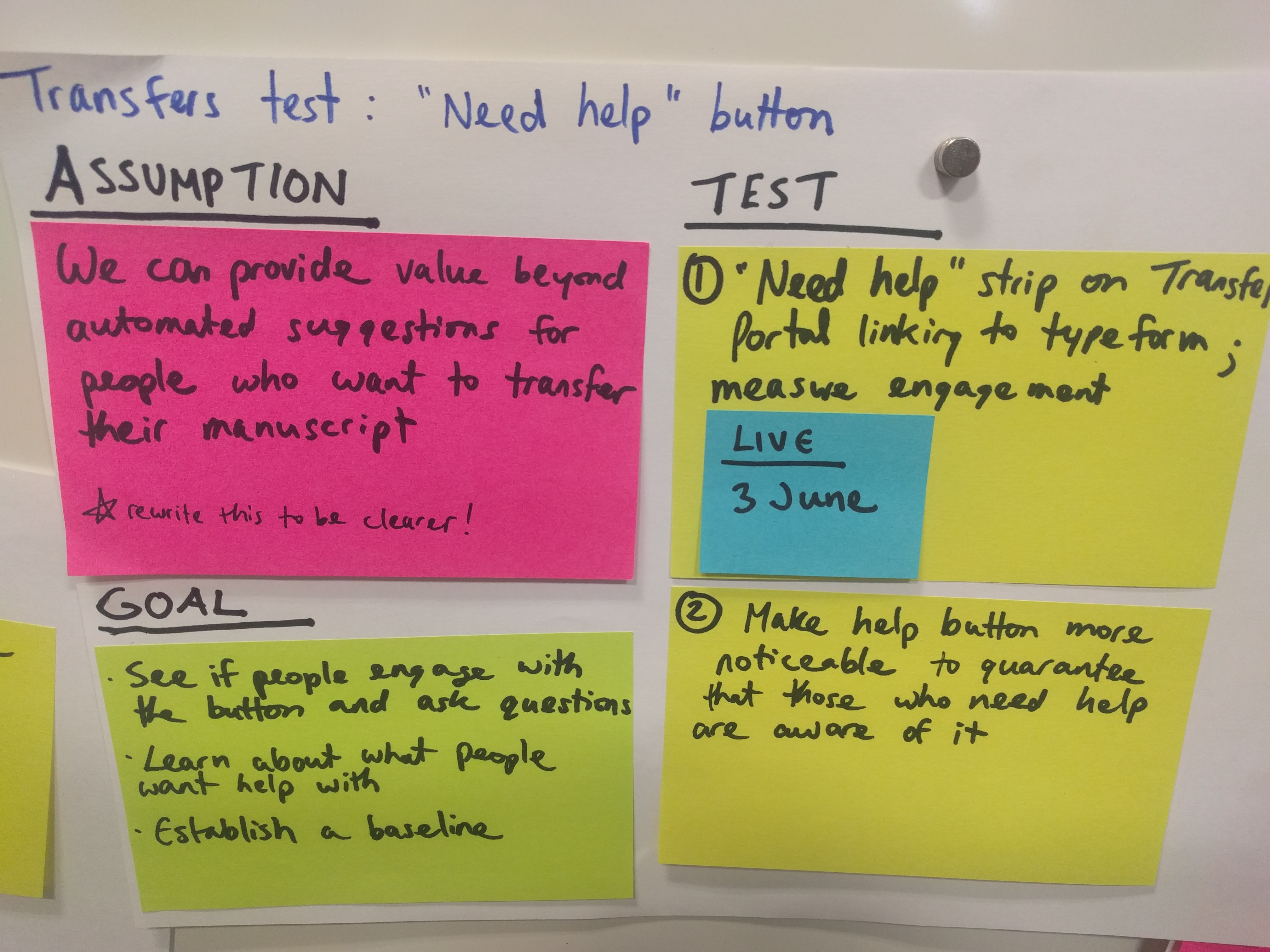

Working with our product owner and business analyst, I ran a session with our main stakeholder where we got all of his ideas for the future service out onto Post-it notes, phrasing them as assumptions to test. We categorised these and had him prioritise which assumptions to test first.

Assumptions workshop with our main stakeholder

I added the high-priority assumptions to the experience map, so the team was clear about which parts of the journey we’d need to focus on.

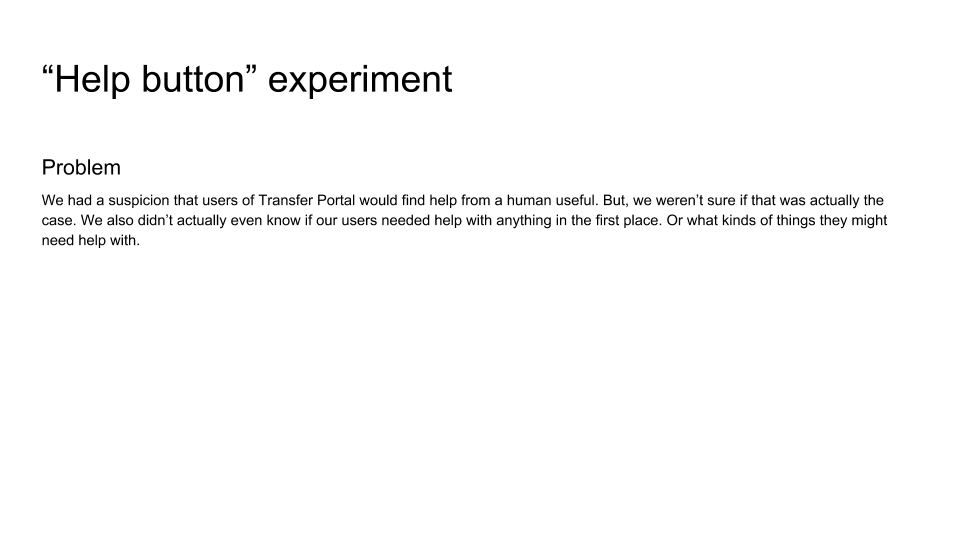

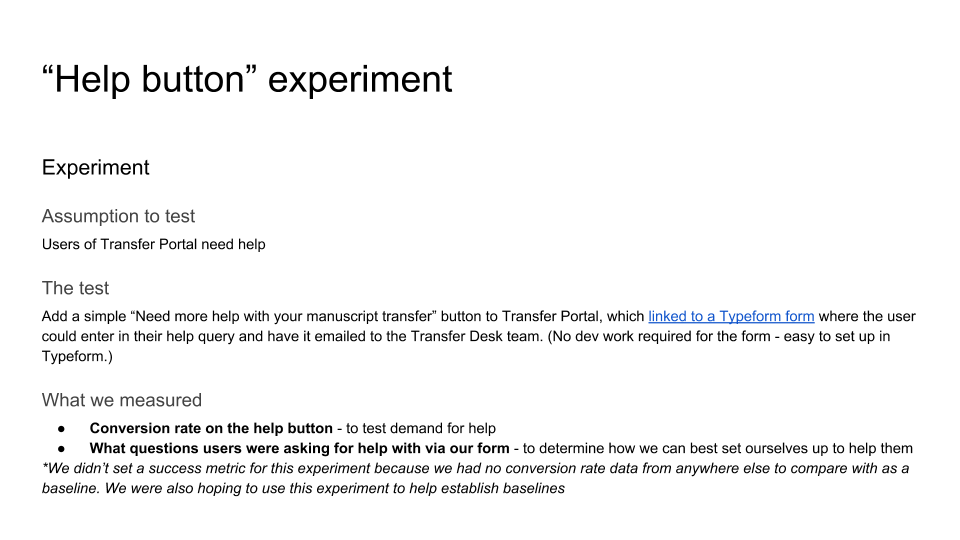

Prototyping new service offerings with minimal code

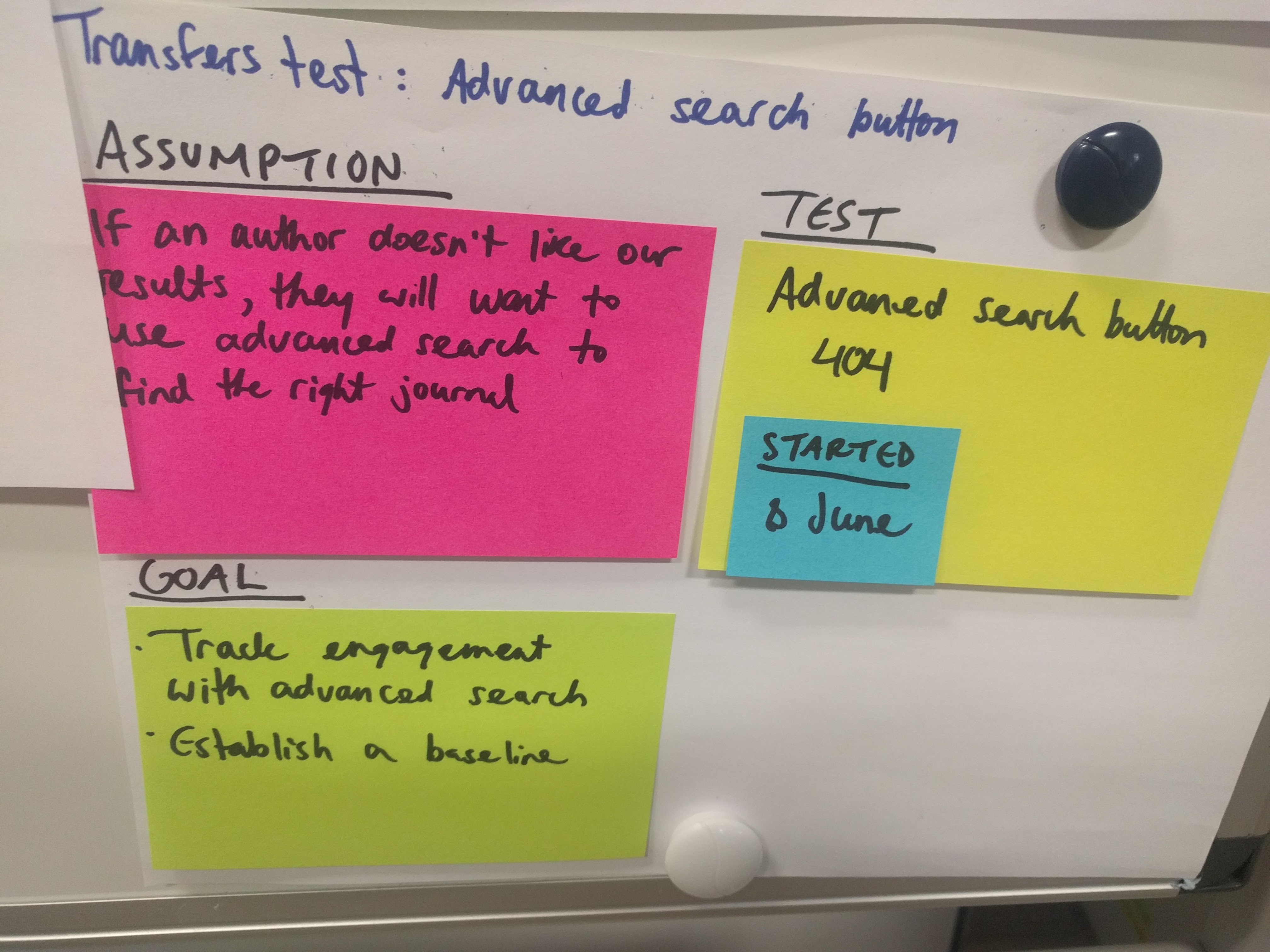

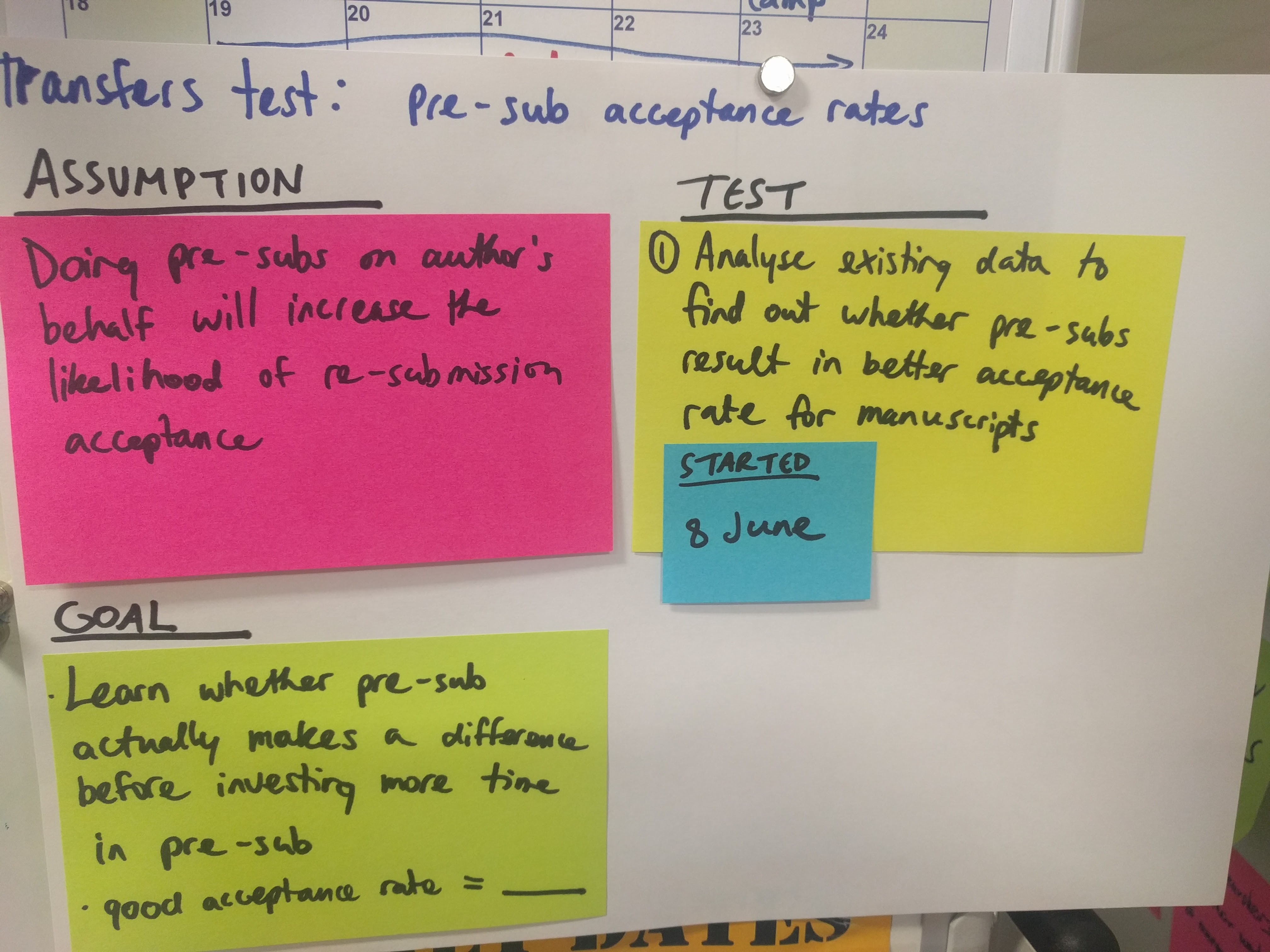

I then got the whole team, including stakeholders, together for a mini assumptions and tests workshop, where we took our prioritised assumptions and devised the leanest ways possible to test them.

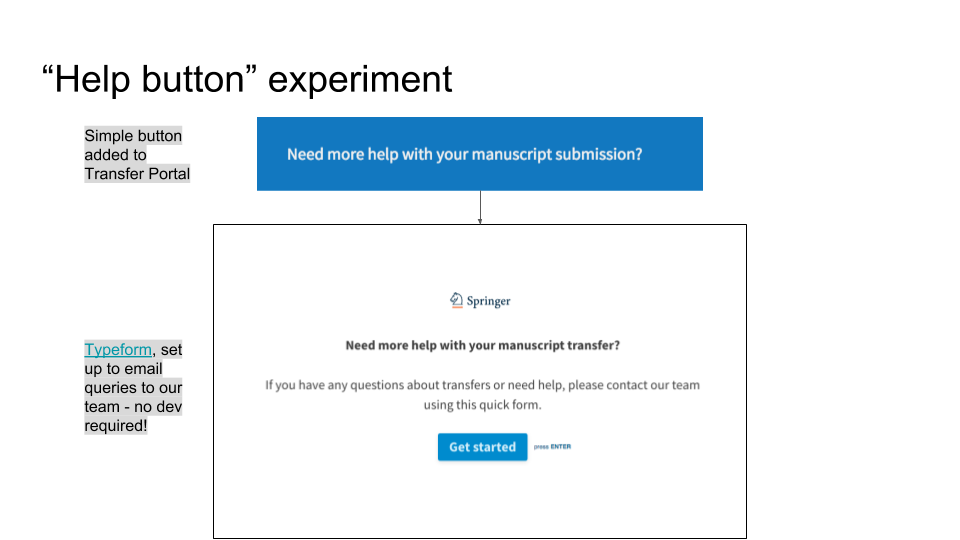

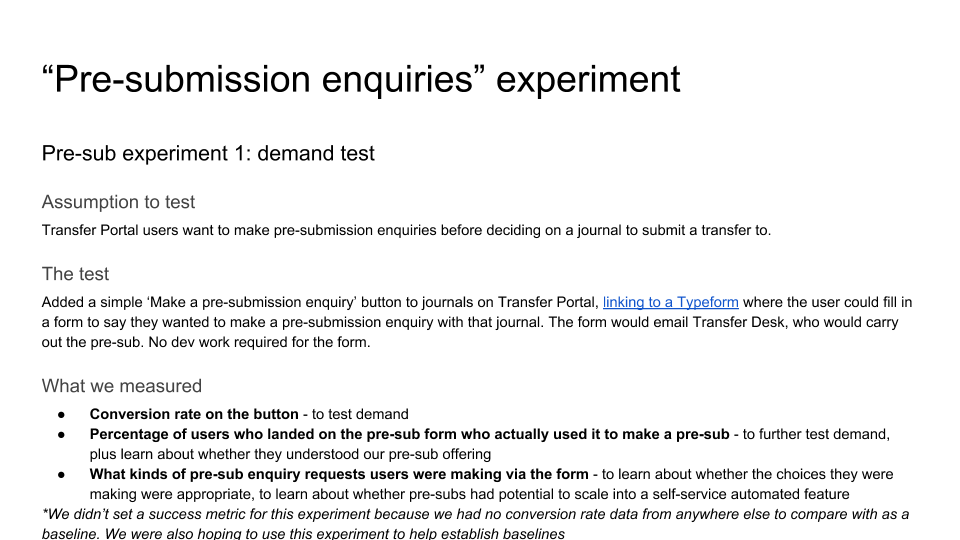

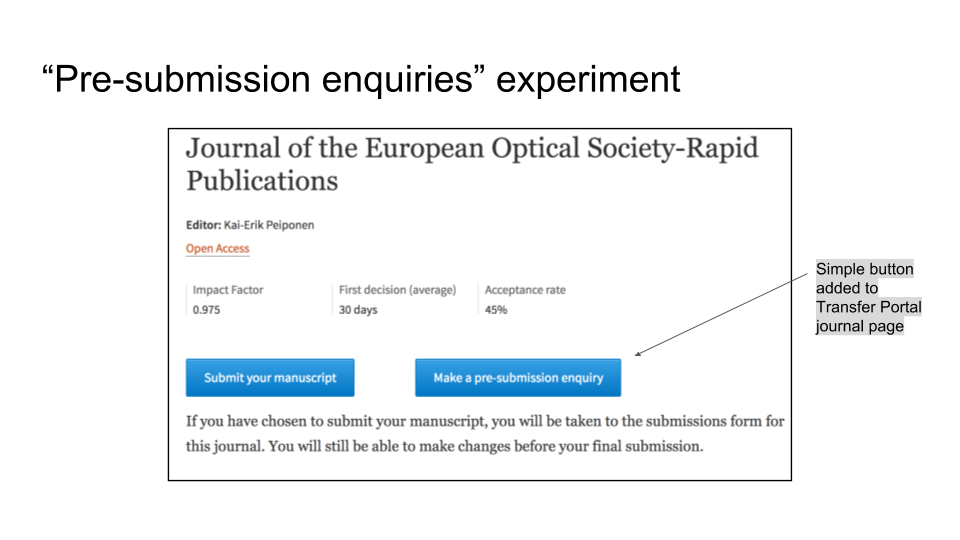

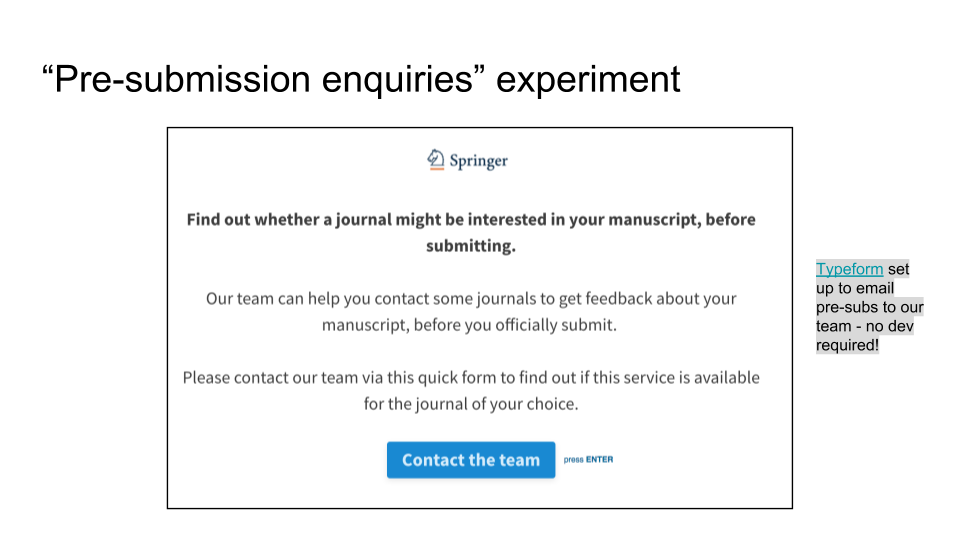

The tests involved little or no code, so we could start testing immediately. They included working prototypes of potential new service offerings: we prototyped a ‘get human help’ feature for portal users by linking to a Typeform set up to forward user queries to our ‘transfers team’, and we used a similar method to prototype a way for academics to check editor interest in their manuscript before re-submitting.

Embedding UX research and design into team workflow

The experience map and the assumptions and tests workshop had gone down well with the team and stakeholders. But we still had no processes in place for learning about users continuously and using what we learned to shape the backlog. So I ran a ‘reset’ workshop for the whole team and our stakeholders to discuss objectives and how we wanted to work, come up with a vision and principles for our future service, and get on the same page about what we knew and didn’t know about our users.

Our vision, OKRs, and team principles

As part of the workshop preparations, I created a high-level service blueprint capturing the stages of the manuscript transfer service and the players involved, as well as principles for improved service design.

We established that we didn’t actually know what the experience looked like for academics using the existing service, where they interacted with our ‘transfers team’ by email. We also thought there might be some quick wins in optimising this email journey, starting with the very first email an academic receives when their manuscript has been rejected - the point at which they decide whether or not to use our transfers service.

So I worked with the team to print out all the emails and screens our users were seeing. We were then able to assess the journey and suggest improvements, ensuring each interaction was clear, user-friendly and helpful.

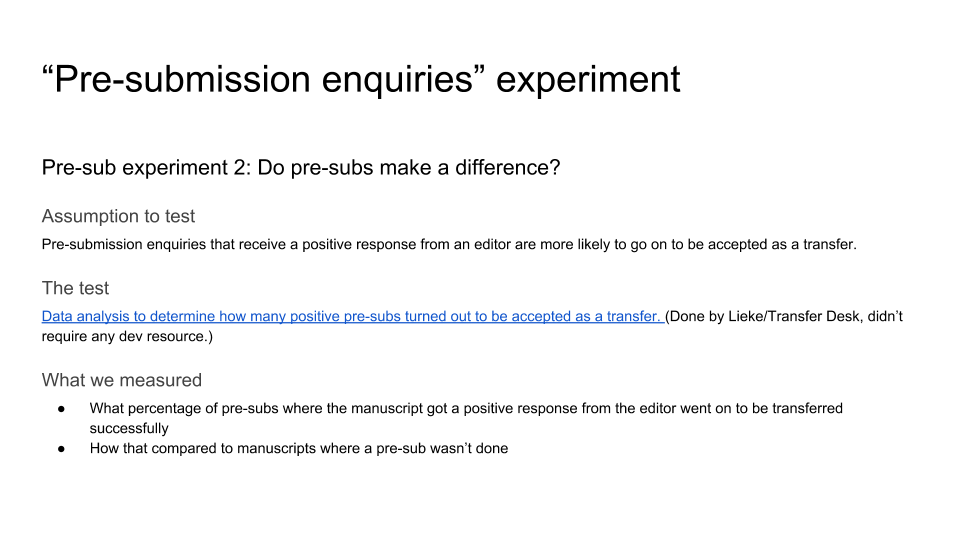

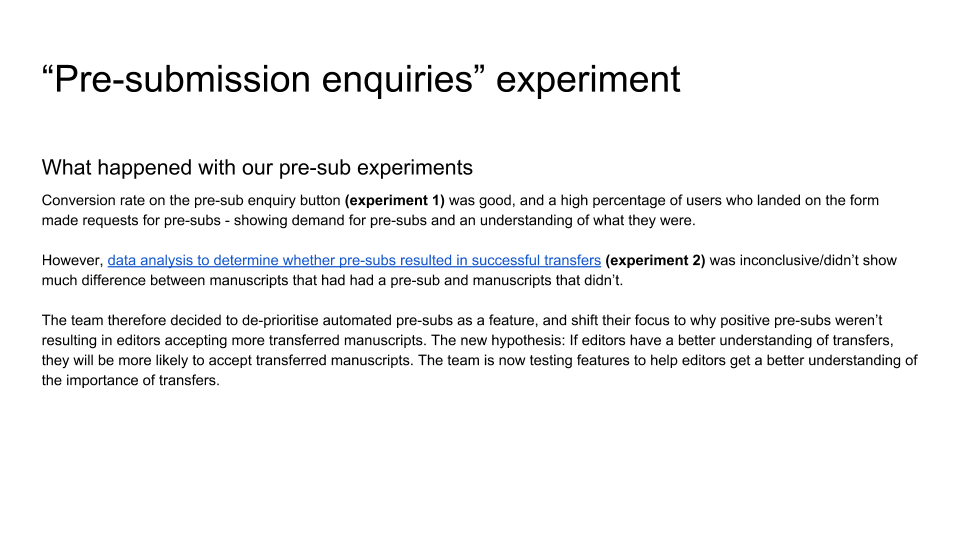

Alongside this, we started to analyse the results of our live tests. Google Analytics was new to the team, so I worked with our developers to make sure it was in place and collecting the right data. With feedback from the team, I created ‘experiment cards’ to track our tests on our board alongside the day-to-day code being delivered. At the team's request, I also set up ‘experiment check-ins’ to report back to everyone on how the tests were going.

When our stakeholder saw our test results, he agreed that we should de-prioritise one big area he’d seen as important; this freed the team to focus on optimising the email journey and improving messaging around the existing service.

Team user research sessions to inform strategy

We used what we were learning to prototype an improved experience that brought us closer to the business’s ideal future service. I organised team user sessions to test this with real users, both to surface quick usability wins and to answer bigger questions that would help us make the service more useful: What’s really going through an academic’s head when they hear that their paper has been rejected? What kind of help do they need at that moment? How do they feel about receiving recommendations for journals to re-submit to?

The whole team observed the user sessions and helped with note-taking and analysis, which we did together, using Post-it notes to group insights into major themes.

Notes and themes from our user sessions

We discovered some quick usability fixes we could make immediately, but it was clear that our service had some major flaws, with strong signals that it wasn’t useful, particularly for senior academics. However, there were signs that taking the service in a slightly different direction could prove very useful for less experienced academics - and that pivoting in this way might bring further benefits, as it might not require the problematic technology we’d been spending time on.

Because the whole team had taken part in the user research sessions, it was easy to agree that we needed to pivot, and our product owner amended our strategy to include investigations into a new direction. I began working with the team to plan more research to help with this - including collecting more data on who was using and benefitting from the current service. This led to further iterating and testing.
